This minute-long clip of a Will Smith concert is blowing up online for all the wrong reasons, with people accusing him of using AI to generate fake crowds filled with fake fans carrying fake signs. In the last day, the story’s been covered by Rolling Stone, VIBE, NME, Cosmopolitan, The Daily Mail, The Independent, Mashable, and Consequence of Sound.

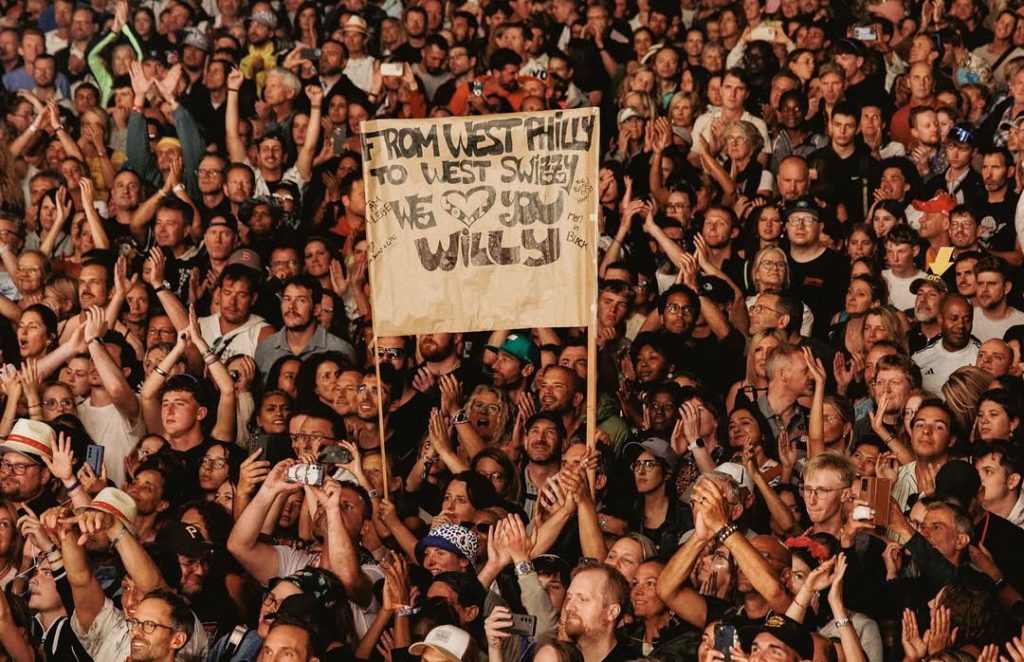

And it definitely looks terrible! The faces have all the characteristics of AI slop, with familiar artifacts like uncanny features, smeared faces, multiple fingers/limbs, and nonsensical signage. “From West Philly to West Swig̴̙̕g̷̤̔͜y”?

But here’s where things get complicated.

The crowds are real. Every person you see in the video above started out as real footage of real fans, sourced from multiple Will Smith concerts during his recent European tour.

Real Crowds, Real Signs

The main Will Smith performance in the clip is from the Positiv Festival, held last month at the Théâtre Antique d’Orange in Orange, France. (Here’s an audience member’s phone recording of the first half of the performance.) It’s intercut with shots of audiences from the Gurtenfestival and Paléo festivals in Switzerland and the Ronquieres Festival in Belgium, among others.

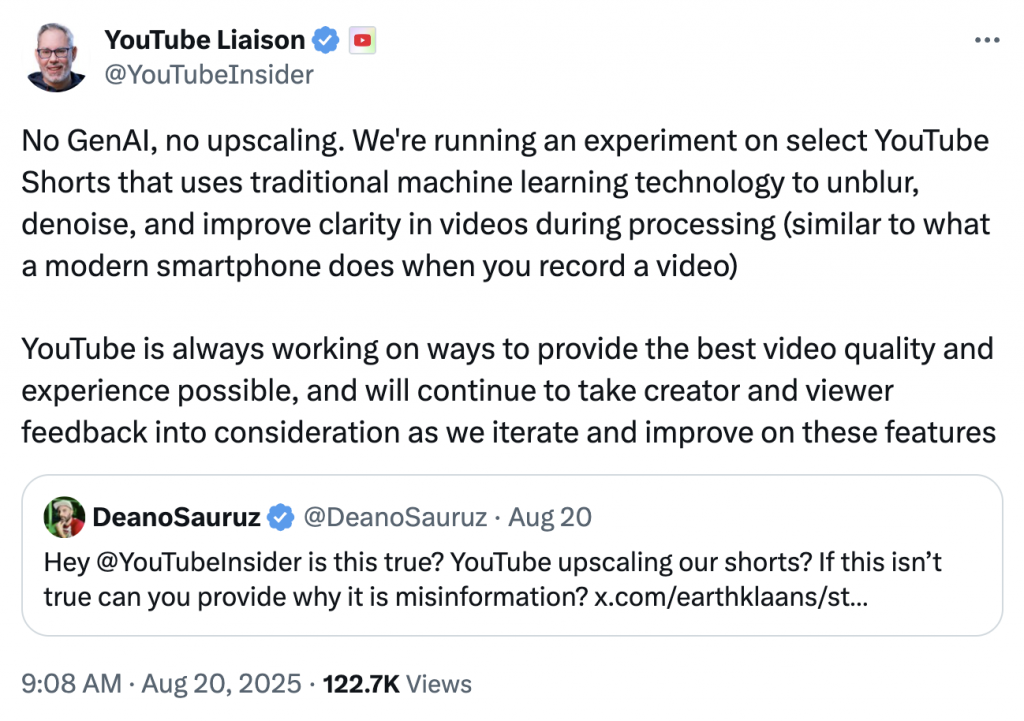

This slideshow of photos from Paléo includes professionally shot photos of the same audience seen in the video.

In these photos, the signs that are distorted in the video can be read, like this one, which actually reads “From West Philly to West Swizzy.” (Short for Switzerland, if you’re wondering.)

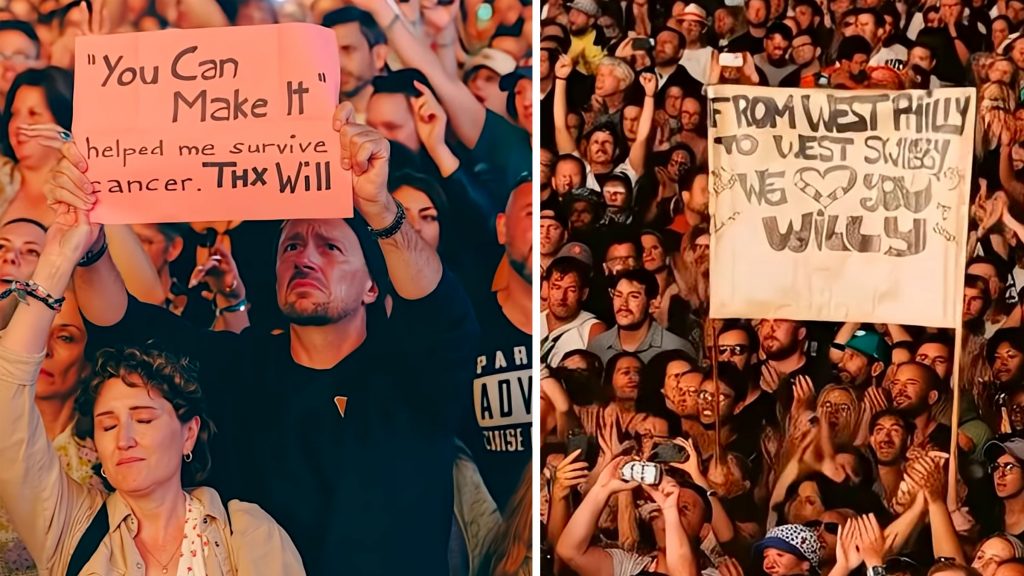

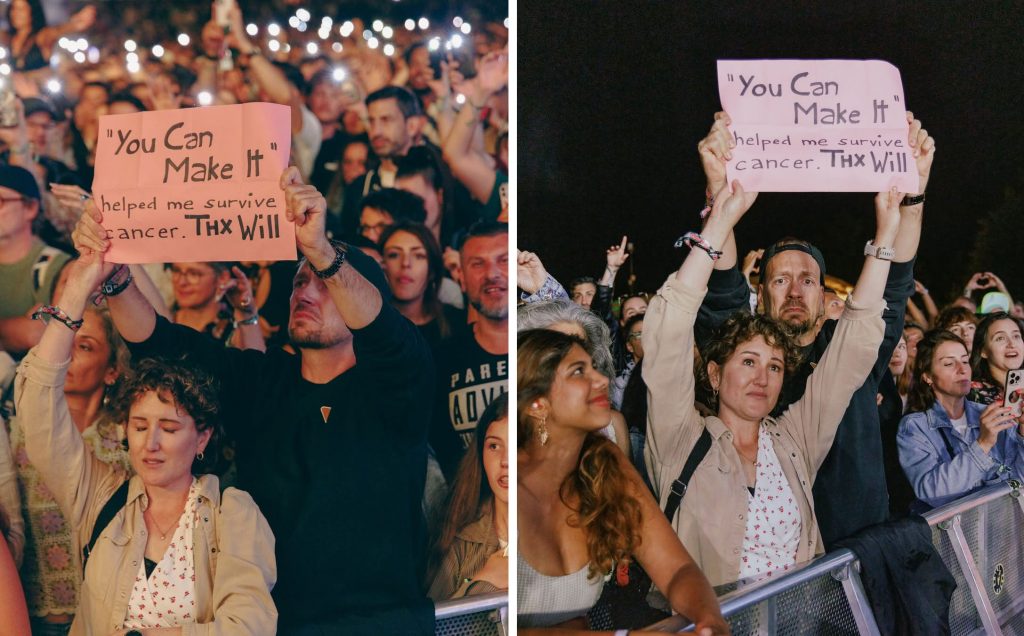

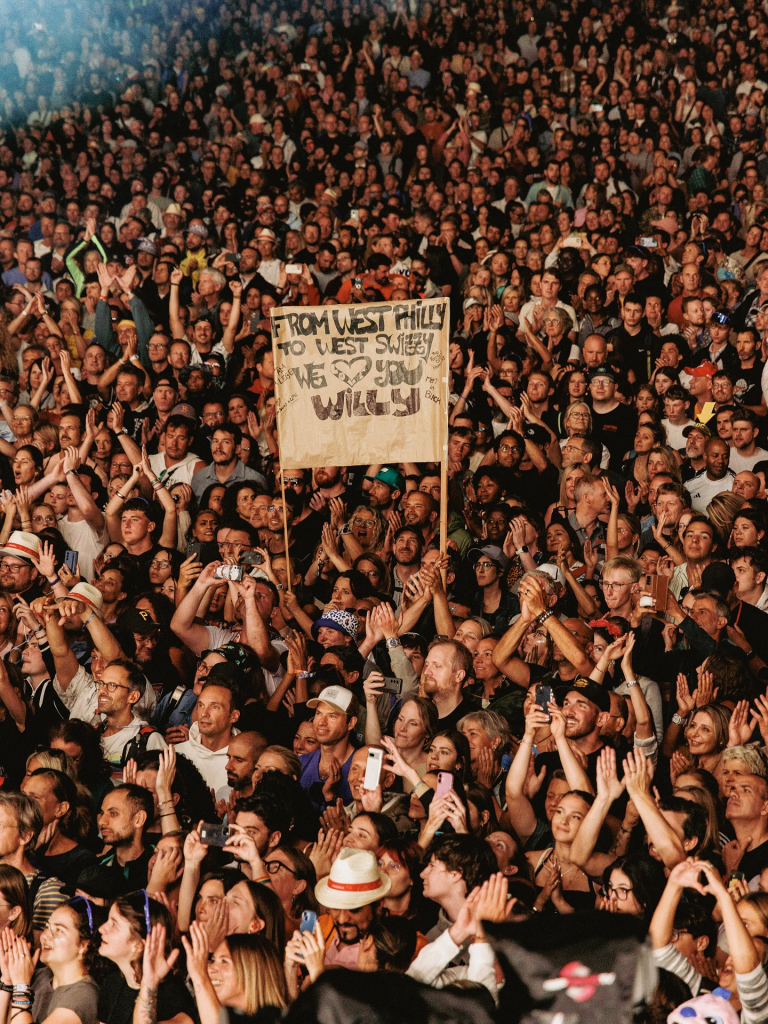

One of the most egregious examples is the couple holding a sign thanking Will Smith for helping them survive cancer, which, if it were AI-generated slop, would be pretty disgusting: a gross attempt to drum up sympathy with fake people.

In an article posted by The Independent today, music editor Roisin O’Connor points to the couple as clear evidence of AI generation:

“Another shot shows a man’s knuckle appear to blur along with his sign, which reads ‘You Can Make It’ helped me survive cancer. THX Will.’ Meanwhile, the woman in front of him is seemingly holding his hand, but the headband of the woman behind her is somehow over her wrist.”

But the couple is real. There are two good photos of them on Will Smith’s Instagram, in a slideshow of photos and videos from Gurtenfestival in Bern last month.

You can see them in this video from Will Smith’s Instagram post, which I clipped below.

Two Levels of AI Enhancement

So if these fans aren’t AI-generated fakes, what’s going on here?

The video features real performances and real audiences, but I believe they were manipulated on two levels:

- Will Smith’s team generated several short AI image-to-video clips from professionally shot audience photos

- YouTube post-processed the resulting Shorts montage, making everything look so much worse

Let’s start with YouTube.

YouTube’s Shorts “Experiment”

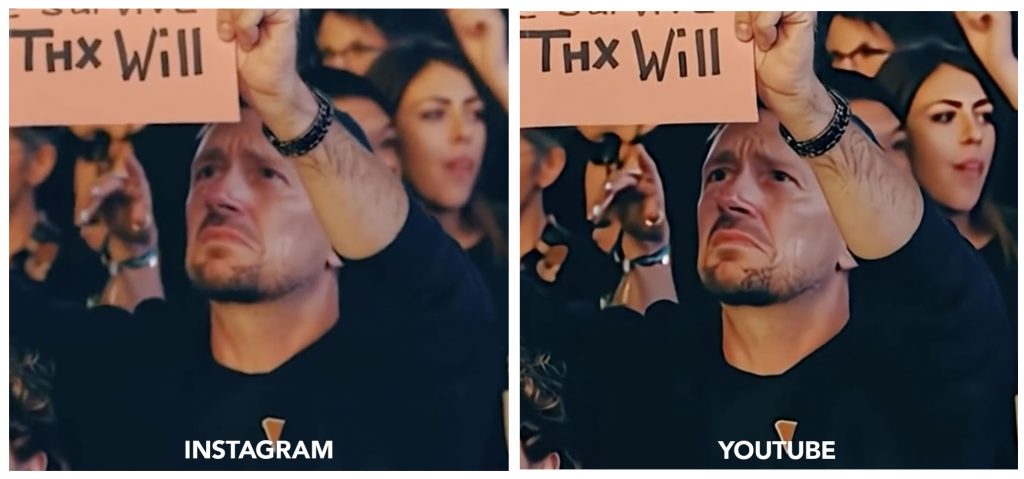

Will Smith’s team also uploaded this same video to Instagram and Facebook, where it looks considerably better than the copy on YouTube, without the smeary sheen of uncanny detail. In the YouTube version, every line is sharper and more exaggerated, which is especially noticeable in crowd shots, on facial features, and on fans who were previously out of focus.

I put the videos side by side below. Try going full-screen and pausing at any point to see the difference. The Instagram footage is noticeably better throughout, though some of the audience clips still have issues.

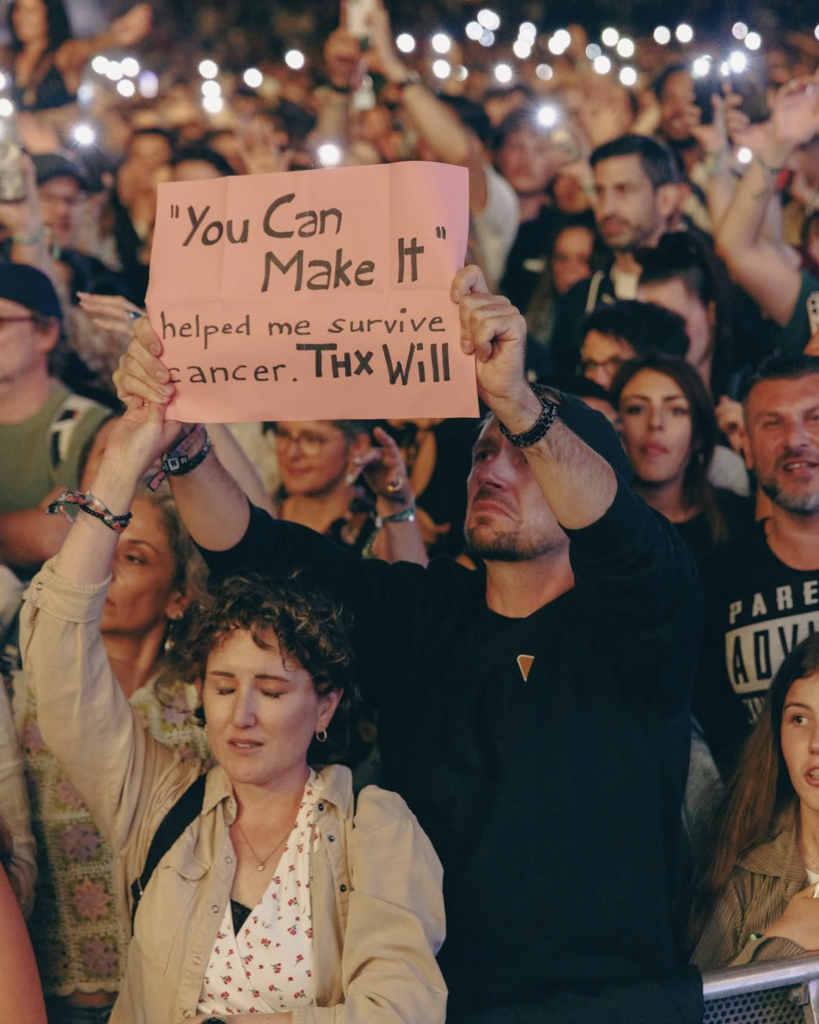

It turns out that for the last two months, YouTube has been quietly experimenting with post-processing YouTube Shorts videos, unblurring and denoising them, often with unpleasant results.

I first heard about this ten days ago, when guitarist Rhett Shull posted a great video about the issue, which now has over 700k views.

Five days ago, YouTube finally confirmed it was happening. YouTube’s Creator Liaison Rene Ritchie posted on X about the experiment.

In a follow-up reply, Ritchie explained how he sees the difference between this and generative AI:

GenAI typically refers to technologies like transformers and large language models, which are relatively new. Upscaling typically refers to taking one resolution (like SD/480p) and making it look good at a higher resolution (like HD/1080p). This isn’t using GenAI or doing any upscaling. It’s using the kind of machine learning you experience with computational photography on smartphones, for example, and it’s not changing the resolution.

On Friday, Alex Reisner wrote about “YouTube’s Sneaky AI ‘Experiment’” in The Atlantic, and got another official statement from Google:

When I asked Google, YouTube’s parent company, about what’s happening to these videos, the spokesperson Allison Toh wrote, “We’re running an experiment on select YouTube Shorts that uses image enhancement technology to sharpen content. These enhancements are not done with generative AI.” But this is a tricky statement: “Generative AI” has no strict technical definition, and “image enhancement technology” could be anything. I asked for more detail about which technologies are being employed, and to what end. Toh said YouTube is “using traditional machine learning to unblur, denoise, and improve clarity in videos,” she told me.

(Update: On Tuesday morning, Rene Ritchie announced on X that YouTube was working on an opt-out for the Shorts filter.)

Will Smith’s Generated Videos

That explains why the entire YouTube Shorts video has a smeary look that’s absent from the copy posted on Instagram. But both versions share the terrible audience shots with AI artifacts and garbled signage.

After looking at it, I believe that Will Smith’s team was using a generative video model, but not to create entirely new audience footage, as most people suspect.

Instead, they started with photos shot by their official tour photographers, and used those photos in Runway, Veo 3, or a similar image-to-video model to create a short animated clip suitable for a concert montage.

Let’s go back to the crowd photo from Paléo in Switzerland:

I believe this is the exact photo that the crowd shot in the video was generated with. Here it is as a two-frame animation, with the first frame from the AI video overlaid on the original photo.

Here’s another example. The photo below was taken at Ronquieres Festival in Belgium, posted to Will Smith’s Instagram three weeks ago.

And here’s the AI-generated clip that it was turned into.

Finally, here’s the original photo of that couple.

And the short video clip the photo was turned into using an image-to-video AI model.

Conclusion

Virtually all of the commenters on YouTube, Reddit, and X believe this was fake footage of fake fans, generated by Will Smith’s team to prop up a lackluster tour.

Like the faces in the video, the truth is blurry.

The crowds were real, but the videos were manipulated: first by Will Smith’s team, and then, without asking, by YouTube.

We can debate the ethics of using an image-to-video model to animate photos in this way, but I think it’s meaningfully different from what most people were accusing Will Smith of doing here: using generative AI video to fake a sold-out crowd of passionate fans.

Update: I tracked down the couple in the video holding the sign about surviving cancer.
