Over at the Expert Labs blog, I did some digging into the unusually large response to the President’s first tweet on @whitehouse during the Twitter Town Hall. In the process, I played around using Twitter Lists as tags, some phrase analysis, and more fun with charts.

I’m cross-posting it below, for posterity. Hope you enjoy it!

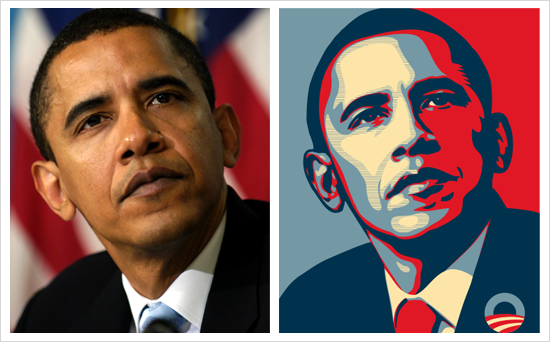

During the Twitter Town Hall collaboration with the White House, President Obama posted a single tweet to @whitehouse, asking this question:

This was historic for two reasons: it was the first time that a President has ever posted directly to a social network from the White House. Second, it was the first time the President’s directly asked for feedback from users of a social network.

There was some great analysis of the Twitter Town Hall activity, including TwitSprout’s infographics and Radian6’s detailed postmortem on Wednesday. Both focused on the #askobama questions that were asked before and during the Town Hall. Using ThinkUp’s data collecting responses to the President’s first tweet, I’d like to focus specifically on responses to the President’s question above.

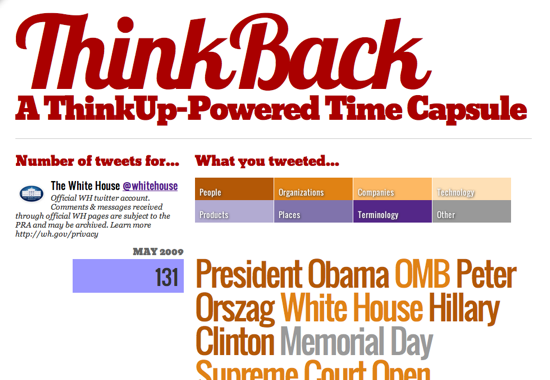

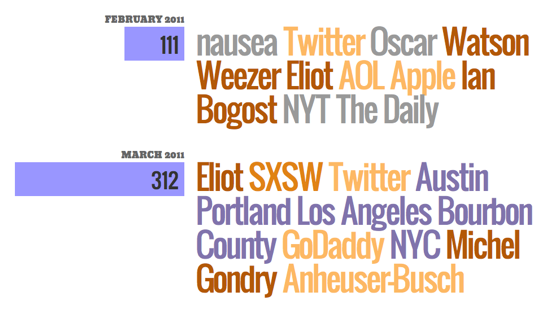

We’ve been using ThinkUp to archive and analyze the White House’s Twitter account since May 1, 2009 and, as we’ve shared before, have gathered a pretty amazing corpus for analysis. With that, it’s useful to see how people responded to this new kind of personal, inquisitive behavior relative to past activity.

The short version: the response to the President’s tweet drew more than three times the number of responses as the nearest runner-up, and more than six times more replies than anything posted in the last year. There were over 1,850 responses to his deficit question, topping the two Grand Challenges questions from April 2010 combined. You can see them all on the White House’s ThinkUp.

By comparison, the chart below shows the top ten most-replied tweets since the White House started using Twitter.

| Replies | Tweet | Date |

|---|---|---|

| 1,857 | in order to reduce the deficit,what costs would you cut and what investments would you keep – bo | 2011 July 6 |

| 583 | What Grand Challenge should be on our Nation’s to-do list? Reply w/your idea now! http://bit.ly/dy9fkL #whgc | 2010 April 14 |

| 461 | The next Apollo program or human genome project? Respond w/a Grand Challenge our Nation should address: http://bit.ly/b1Fyq9 #whgc | 2010 April 12 |

| 286 | The President, VP, national security team get updated on mission against Osama bin Laden in the Sit Room, 5/1/11 http://twitpic.com/4si89t | 2011 May 2 |

| 200 | Today, there are over 20k border patrol agents — double the number in 2004. Send thoughts on #immigration reform our way. | 2011 May 7 |

| 172 | Obama’s long form birth certificate released so that America can move on to real issues that matter to our future http://goo.gl/fNmdR | 2011 April 27 |

| 165 | President Obama just presented a parody movie trailer @ the #WHCD Enjoy: http://www.youtube.com/watch?v=508aCh2eVOI | 2011 May 1 |

| 150 | Reply to us w/ your questions for top WH policy folks, we’ll take some in our online panel right after #SOTU at http://wh.gov | 2011 January 25 |

| 129 | President Obama on the phone with President Hosni Mubarak of Egypt in the Oval Office, VP Biden listens http://twitpic.com/3ubl1u | 2011 January 29 |

| 122 | President Obama on Libya: “I refused to wait for the images of slaughter and mass graves before taking action” Full video: http://wh.gov/aDC | 2011 March 29 |

It’s worth noting that eight out of the top 10 most replied were all posted in the last six months, suggesting that the White House’s New Media team is increasing its effectiveness in engaging its audience on Twitter, even as that audience grows.

This tweet was the most effective the White House has ever been at drawing a behavior response from its followers. This is interesting, because it differs from typical tweets in two ways:

- It is personal (using the President’s “- bo” signature)

- It asks a concrete question

While some of the response could be attributed to the focus on the Twitter event, it’s likely that continuing this question-answer process with a personal touch leads to deeper and richer engagement.

Who talks to @whitehouse?

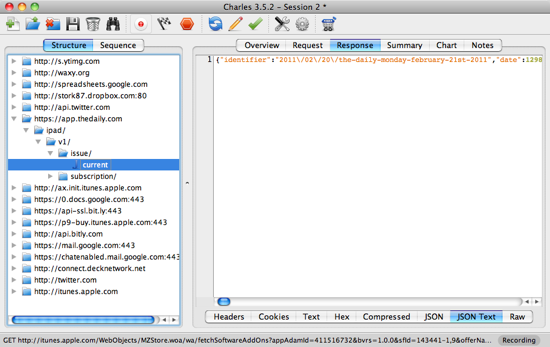

To help determine the subject expertise for each of the respondents, I used the Twitter API to retrieve the lists that each person belonged to. My hope was that the list names could act as tags, like on Delicious or Flickr, to help group and categorize individuals.

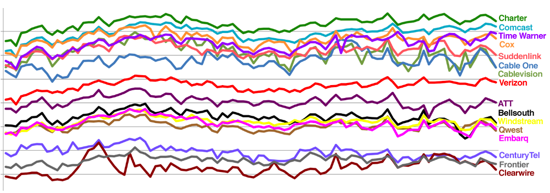

Because lists are often used for personal use, the most frequently-used list names include some unhelpful ones like “friends” and “people,” but many can be used as useful categories like “politics,” “writers,” and “tech.” Here’s a Wordle of the top 100 most frequently used.

Lists that @whitehouse responders belong to

These lists let us examine the responses from different facets. Here are the top five responses from people most tagged with “politics” or “political”:

| mommadona | mommadona | .@whitehouse War, as a political tool, is no longer an option in the 21st Century. Make it so. #ASKOBAMA #dem #p2 #p21 |

| lheal | Loren Heal | @whitehouse Investments? You mean spending. When the government spends, it crowds out private investment rather than encouraging it. |

| tlanehudson | Lane Hudson | @whitehouse Fair tax based on ability to pay. End war spending. |

| SeamusCampbell | Seamus Campbell | @whitehouse Police forces for each cabinet-level department #askobama |

| lisalways | lisalways | @whitehouse Peacetime defense should be cut, minimize war in Afg & end soon. Stop Bush Tax cuts. Push hard for advance on infrastructure |

Compare that to people tagged with “tech” or “technology”:

| colonelb | David Britten | @whitehouse Eliminate the federal department of education and return education to the states. #askobama |

| sharonburton | Sharon Burton | @whitehouse Health and education are the conditions for prosperity. Cut tax benefits to corporations. They benefit from the conditions. |

| TotalTraining | Total Training | @whitehouse less international support and wars more focus on domestic concerns like education for the young and old |

| atkauffman | andrew kauffman | @whitehouse costs need to be those that citizens do not need, loopholes, high costs of congress etc investments in learning and CHILDREN |

As you’d expect, the responses are very different from people tagged “green”:

| LynnHasselbrgr | Lynn Hasselberger | @whitehouse cut defense, big oil subsidies, tax extension on wealthiest, corp tax loopholes. Invest in teachers + cleanenergy #AskObama |

| CBJgreennews | Susan Stabley | . @whitehouse Will you support the end of government subsidies for oil and energy companies, esp. those that have record profits? #askObama |

| ladyaia | Susan Welker, AIA | @whitehouse Money given to farmers of GMO products and more support of organic farmers. Our health costs would be reduced by better food. |

| SmartHomes | Daniel Byrne(Smarty) | @whitehouse jobs and budget fix: massive release of oil from strategic reserve to lower oil price. Effect: No cost stimulus package 4 every1 |

| dcgrrl | DC Grrl | @whitehouse I would definitely cut subsidies to energy companies, and I’d keep infrastructure and education investments. #askobama |

It’s surprising how useful these results are, considering how limited Twitter Lists are exposed throughout the interface. This suggests that Twitter List memberships can be a useful measure of determining a user’s authority in subject areas, which we’ll be looking into for ThinkUp.

The Answers

When asked where to reduce spending, 479 people (about 25%) included some variation of “war,” “defense” or “military.” Other popular suggestions included raising taxes/ending the Bush-era tax cuts (11%) and tax subsidies for oil companies and farming (6%). People seemed to be evenly split between those who want to protect Medicare and Social Security and those who want to see it overhauled.

With regards to where to invest for the future, the most popular was education, with about 17% of responses including terms like “education,” “school” or “teachers.” 6% want to see renewed investments in energy, 5% on infrastructure projects, and 2% in health care. (Surprisingly, only 15 people mentioned decriminalizing marijuana.)

For the full set of responses, you can browse them all on ThinkUp. Or, if you like, the entire dataset is available on Google Docs or embedded below.

Conclusion

For us, it’s been fascinating to see an American President use social media to directly ask questions and get answers. We hope other government agencies are taking note of how powerful the combination of a direct question, authentic voice, and an audience can be for democracy. And these lessons extend to the private sector, as well: every company can learn how to better interact with their community from this national experiment in democracy.

The next step, of course, is to make sure those answers are useful enough to inform decision-making. If our representatives are listening, and people feel they’re being heard, everyone benefits.

We’re happy for people to reuse our findings. Any questions about these results can be addressed to [email protected].