Ello launched on August 7, 2014, with big dreams and big promises: a new social network defined by what it wouldn’t do.

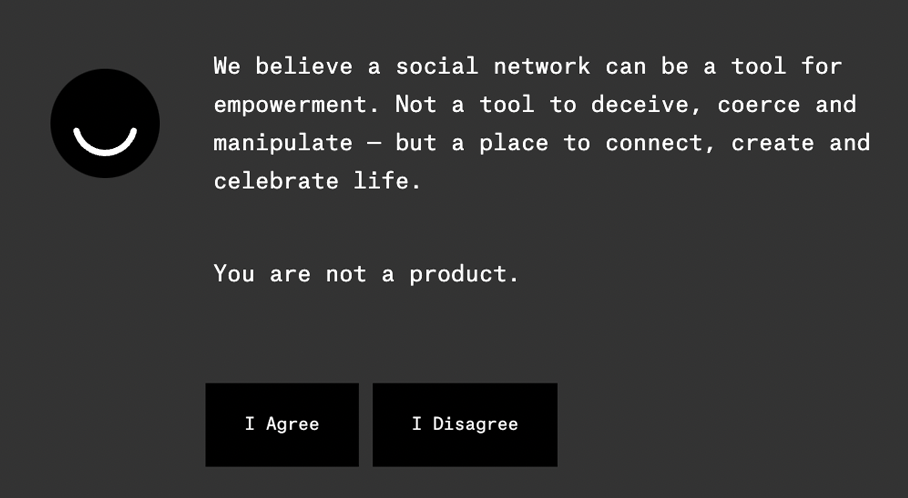

They laid it all out in a manifesto, right on their homepage:

Your social network is owned by advertisers.

Every post you share, every friend you make and every link you follow is tracked, recorded and converted into data. Advertisers buy your data so they can show you more ads. You are the product that’s bought and sold.

We believe there is a better way. We believe in audacity. We believe in beauty, simplicity and transparency. We believe that the people who make things and the people who use them should be in partnership.

We believe a social network can be a tool for empowerment. Not a tool to deceive, coerce and manipulate — but a place to connect, create and celebrate life.

You are not a product.

From its launch, Ello defined itself as an alternative to ad-driven social networks like Twitter and Facebook. “You are not a product.” (The “I Disagree” button linked to Facebook’s privacy page.)

I’d link to that manifesto on Ello’s site, but I can’t, because Ello is dead.

In June 2023, the servers just started returning errors, making nine years of member contributions inaccessible, apparently forever. Every post, artwork, song, and portfolio, along with the community built there, was gone in an instant.

How did this happen? What happened between the idealistic manifesto above and the sudden shutdown?

It’s a story so old and familiar, I predicted it shortly after Ello launched.
