Two weeks ago, the server I host all my personal projects on was hacked by some guy in Ukraine. It really sucked.

I was overdue for a redesign anyway.

I first noticed something was amiss while trying to post a link here and the server was unusually slow. I SSHed in and the server was slow to respond, as if system resources were being consumed by a runaway process.

A quick ‘top’ revealed that MySQL was pegging the CPU, so I logged into the MySQL console and saw that a dump of the database was being written out to a file. This was very unusual: I never schedule database backups in the middle of the day, and it was using a different MySQL user to make the dumps.

Then I noticed where the mysqldump was being written to: the directory for a theme from a WordPress installation I’d set up the previous month, an experiment to finally migrate this blog off of MovableType.

This set off all my alarms. I immediately shut down Apache and MySQL, cutting off the culprit before they could download the dumped data or do any serious damage.

I’d recently updated to the latest WordPress beta, and saw that the functions.php file in the twentysixteen theme directory was replaced with hastily-obfuscated PHP allowing arbitrary commands to be run on my server through the browser.

This confirmed all my lingering unease about running WordPress, built up over a decade of hearing horror stories of friends and acquaintances getting hacked–but that stereotype of WordPress security was outdated and wrong, and led me to make a very stupid, very serious blunder.

I moved the WordPress install, along with the hacked PHP and aborted mysqldump, to my local machine and deleted it from my server. I looked through the logs to see what else they’d been up to, and convinced I’d closed the hole by removing WordPress, eventually started my server back up to minimize downtime.

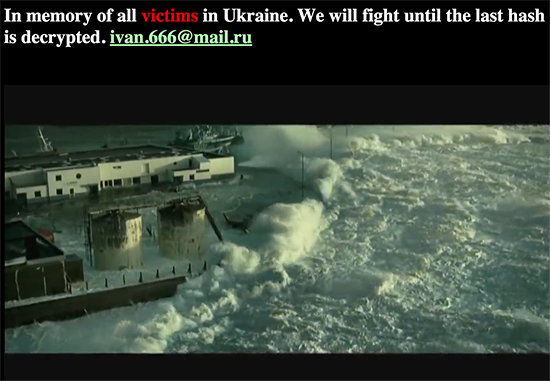

The next day, “Ivan” dropped every database in MySQL, deleted my blog, and replaced it with this pseudo-political polemic he’s used on other compromised sites.

(As an aside, the embedded YouTube video is this dubstep remix of the Requiem for a Dream theme by Clint Mansell and Kronos Quartet, misattributed to Hans Zimmer. Your guess is as good as mine.)

How It Happened

After going through every log file, and with the help of Gary Pendergast from the WordPress security team, I assembled a minute-by-minute timeline of what happened.

Our friendly hacker first appeared in the logs on the Waxy.org homepage, running a vulnerability scan testing thousands of different URLs to find possible vectors of attack. And it finds one, a copy of PHPMyAdmin that I apparently installed in 2002 and forgot about it entirely.

He tried to sign in briefly, but failed, so starts looking for other PHP scripts on the server using a simple Google query for “site:waxy.org inurl:php”. This turns up half a dozen results, with one that looks promising — a project I did in 2005 to visualize a data dump that Boing Boing released to commemorate their fifth birthday.

He starts an open-source toolkit called SQLMap to probe the script for SQL injection holes, it quickly finds one, and uses it to own the database.

In the database, he sees a database for WordPress from the installation I mentioned earlier. He fires up a third vulnerability scanner called WPScan to search for WordPress vulnerabilities, but it’s not clear if he finds any.

Either way, it’s not necessary — with access to MySQL, the culprit can add himself a WordPress admin and sign in. Immediately, he uses the WordPress theme editor to install malware PHP to the theme, allowing him to execute arbitrary commands on the server. Just in case, he writes copies of the malware PHP to three more locations outside of the WordPress installation in case it’s deleted.

So, after I removed access to WordPress, he was still able to get to the malware needed to own the box. Eventually, he grows bored and deletes the database and everything on Waxy.org.

Comedy of Errors

Fortunately, I had a database backup from earlier that morning, and a recent backup of all files. I killed all services on the server, and started the long process of restoring sites carefully, one by one, with modern security practices in mind.

But this was easily one of the most miserable, stressful experiences of my life. Yesterday, I woke up in the middle of the night with a cold-sweat nightmare that I was hacked again.

I had a PTSD-ish nightmare that my server was hacked again, this time from an exploit in Postfix. Stupid lingering stress.

— Andy Baio (@waxpancake) December 9, 2015

And it was so avoidable, born from laziness and complacency. Let’s go through the highlights of bad security practices:

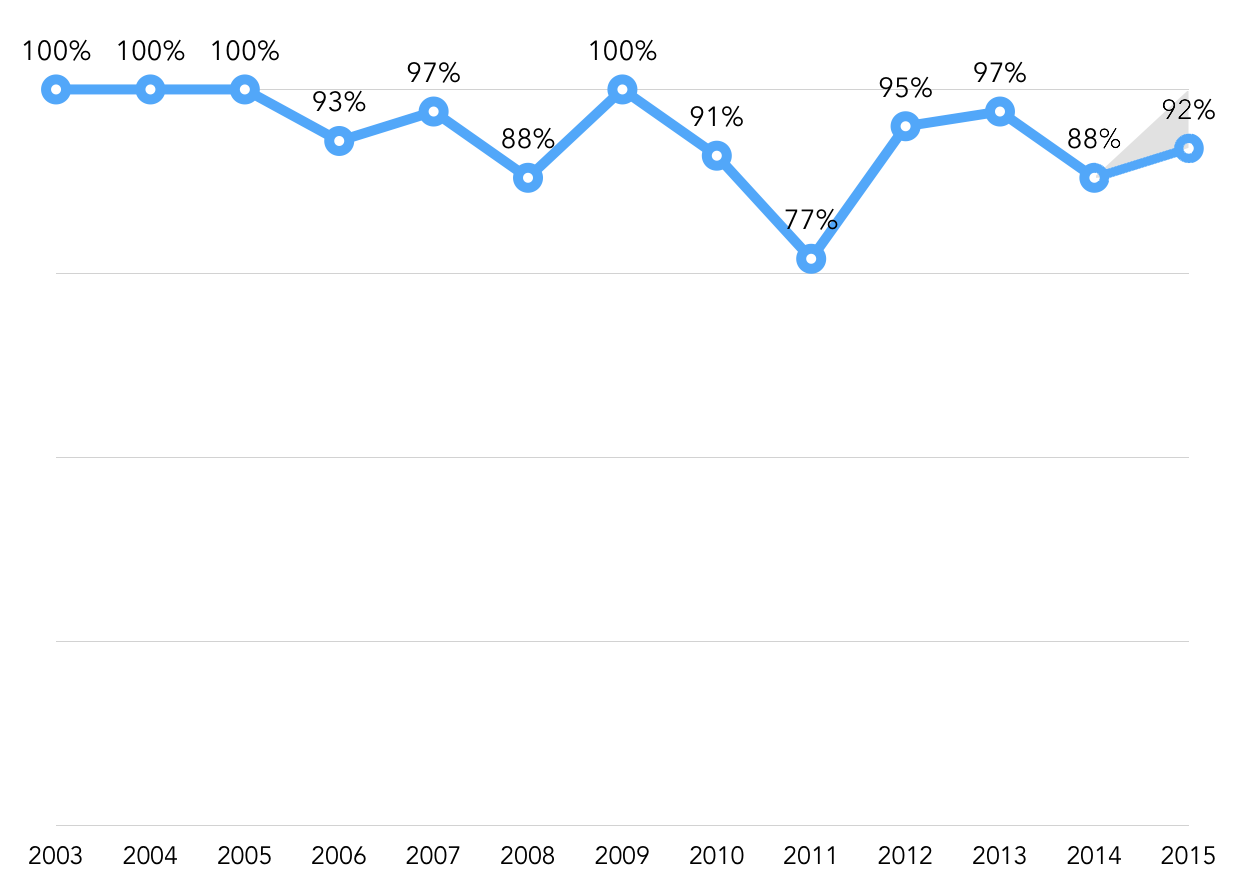

- My old server at Softlayer was running continuously for eight straight years, since December 2007, and there was code carried over from previous servers dating back to 2002.

- The Boing Boing Stats was a throwaway PHP hack that sat untouched for a decade on multiple servers with a glaring SQL injection hole. And, hell, I didn’t even know that ancient copy of PHPMyAdmin installation was still hanging around.

- I was using a shared MySQL user account for nearly every project running on the server, which had near-universal permission to delete records or drop databases entirely. Plus, it allowed for remote connections. So bad.

- I played loose with file permissions, giving the Apache user the ability to write to far more than it should have.

- I was running Centos 5, but not keeping up-to-date with security updates.

- Critically, I wasn’t running any software to monitor and ban vulnerability scans or alert me to malicious activity.

And that’s just scratching the surface of issues relevant to this hack. I was still using password-based logins with SSH, root logins were available, MySQL passwords were weak… Frankly, it’s amazing I wasn’t hacked earlier.

Righting Wrongs

If there’s a bright side to any of this, it’s that it gave me a long-overdue crash course in modern infosec practices. And migrating from a dedicated leased server to virtual servers feels like waking up in the future.

After a bunch of research, I decided to abandon dedicated servers entirely and move to a beefy DigitalOcean droplet running Ubuntu 14.04. It’s more powerful than my old server, provisioned instantly, and I’m paying a fraction of the price. DigitalOcean’s admin tools are phenomenal, and backups are automatic and painless.

DigitalOcean’s tutorials are absolutely incredible, and I found them invaluable in initial setup, securing Ubuntu, my firewall, MySQL, and using Fail2Ban to protect Apache and SSH. There’s still more work to do for monitoring intrusions, but it’s a start.

So, all of that sucked. But, while bittersweet, I’m better and stronger for it.

Thanks, “Ivan.”