My father passed away on January 24, less than two weeks ago, while my family was still reeling from the news that he had developed Stage IV lymphoma.

I’ve never talked about my dad here before, mostly because I don’t write often about my personal life, but I’ve made exceptions in the past to commemorate and honor the deaths of people who meant a lot to me.

On Saturday, we gathered together at the Catholic church in Burbank where my parents were married and my grandfather’s funeral was held, and we said goodbye to my dad, Stephen Baio.

At the service, I read the eulogy below, which tried to explain why he was special to so many of us. Writing it was easy, at least in part because he wrote the best bits himself. The hard part was reading it.

There are three words I keep hearing over and over again since my dad passed away, when I’m talking to people who knew him — some I know well, some who haven’t seen me since I was a little kid, and some of you that I’d never met before.

Those three words are, “Everyone loved Steve.”

It’s true, everyone loved Steve. To know my dad was to love him.

Stephen Baio was impossible not to like. He lit up a room with a joyful and contagious enthusiasm — sweet and sincere, and genuinely funny. He was unabashedly silly. He laughed at his own jokes and at yours. He made it very easy to love him.

He was generous to everyone he met, whether it was someone he knew for 30 minutes or 30 years. To me, it always felt like he had a hard time saving money because of how much he loved spending it on others.

My dad made people happy, and as long as you’re making yourself and other people happy and you’re not hurting anybody, who cares what anybody else thinks? You’re probably doing the right thing.

My dad taught me the importance of being yourself. Being true to who you are. He taught me the values of loyalty, friendship, and kindness.

And these weren’t lessons that could be spoken: he taught me by how he lived his life, it was central to how he walked through the world, and how over time, you change it by who you touch along the way, in ways big and small.

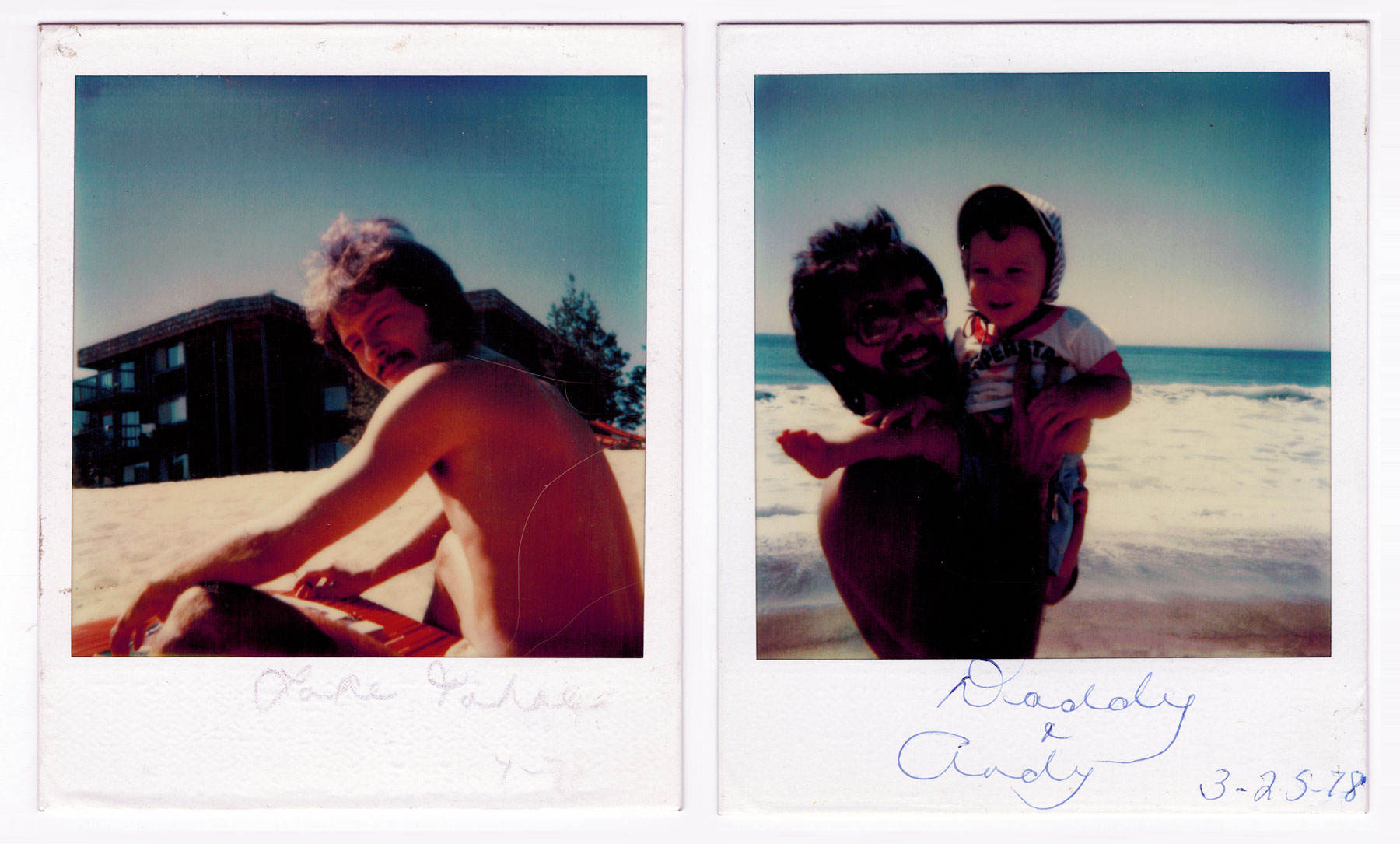

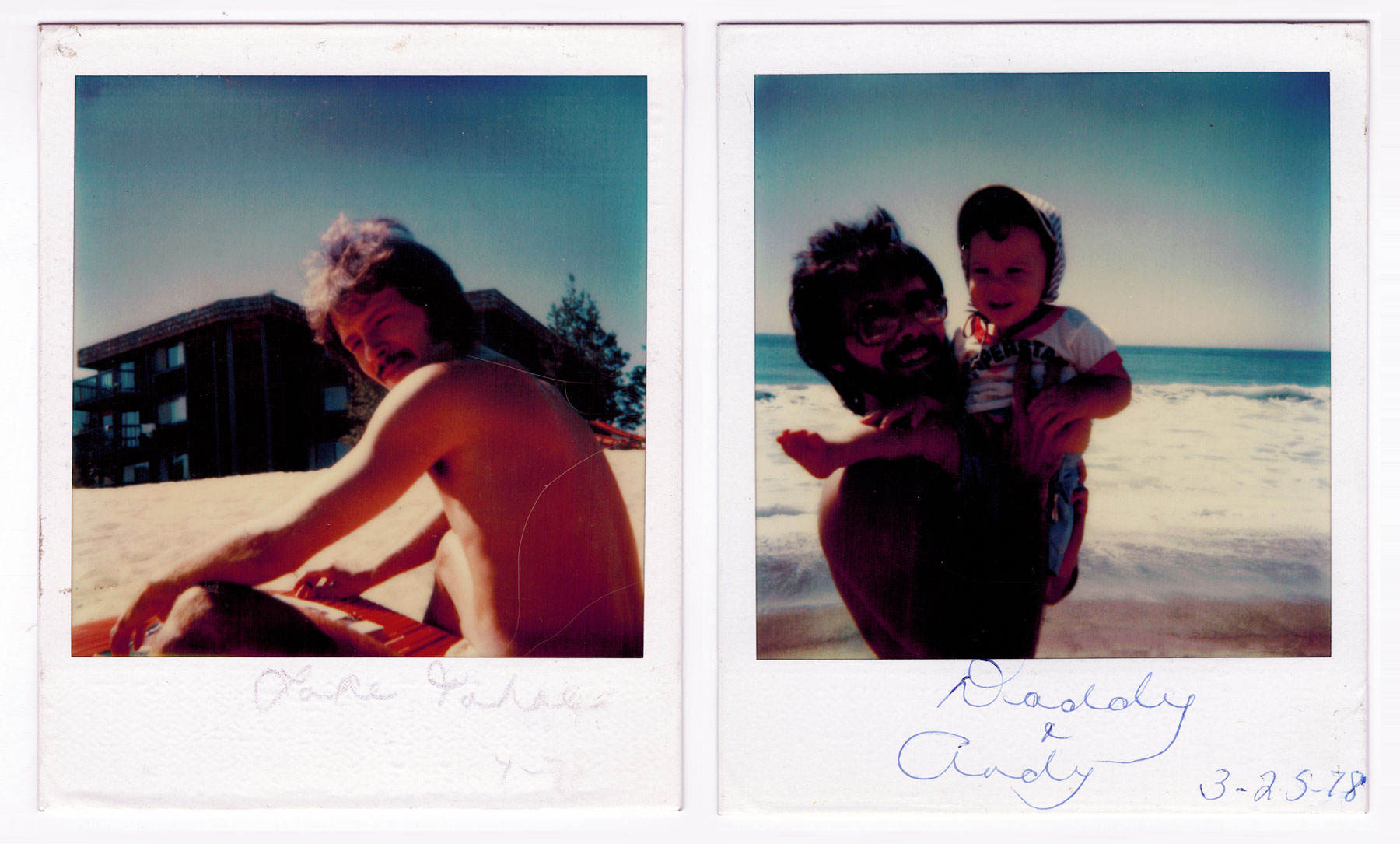

After he passed away, I went into his bedroom to find some photos for this memorial service.

And in one big box, I found photos from his entire life. Photos from his childhood with his mother, Teresa, and with his brother, Anthony, and his late sister, Nicolina, my Aunt Nicki.

I found photos with old friends in the ‘70s, camping in the mountains and just goofing around. Photos from his prom, from parties, from his wedding and with my mom. So many from my childhood, going to Disneyland and the beach, dressing up for Halloweens, Christmases, and Easters long ago. Photos from the tours he went on, traveling with bands or shooting photos of monuments in Tokyo. And later, photos from my own wedding, photos with his grandson.

But after that, I found three more big boxes of the same size, and I was surprised to see them stuffed full of every greeting card, postcard, letter, and memento he ever received. Clippings from newspapers, birth announcements and obituaries, matchbook covers and cassette recordings of long-forgotten conversations. Piles of memories spanning decades, immaculately organized into dozens of manila envelopes, filled to bursting.

It was such a clear reminder how much every one of your friendships and relationships meant to him.

Everyone loved Steve, and you should know without any question or doubt, that he loved you all back.

Seven years ago, my Dad wrote a long handwritten letter, one of thousands he’d written in his life. But this one was special, it was only meant to be read in the event of his passing.

I read it for the first time the day after he died, and I’d like to read from it now.

To Whom It May Concern,

I guess this would be considered my last Will and Testament.

Anyways, whatever it is, these are my wishes, for things to be done whenever I’m gone.

I’m hoping there’s not a lot of things to ask for, I don’t want to be too much of a pain in the ass.

So here goes, I’m writing these things down as I’m writing and thinking.

He goes on to talk about how he wanted his personal belongings distributed or donated. He talks about how he wanted to be cremated, and how he wanted his ashes scattered in the High Sierras, up by Olancha, Mammoth, and June Lakes, places that he loved so much.

He said that he didn’t want an expensive funeral, just a memorial with all of his friends and family through the years — all of you — to come together and have what he called “a nice party.”

He wrote, “I want all the people who meant a lot to me to be there. I’m sure you’ll have a good time.”

I’m going to give my dad the last word, by reading from the end of his will, a message that he addressed directly to all of you — the people who meant so much to him in his life. Here’s what he wrote:

Well, I think that’s about it for now. If I think of anything else, I’ll let you know. (Ha Ha.)

I’ll sure miss you a lot, more than you’ll ever know.

Maybe I’ll see you where ever God decides to send me. I hope there’ll be fishing and pretty girls, good music, my Mom’s Italian spaghetti, and beer.

Thank you all for everything, your love, your friendship, good fun, good laughs and memories, and for the pleasure just to know each and every one of you.

With all my love, now and forever.

Bye for now,

Me

Stephen

Thank you.